AREA Requirements Committee Advances Work at F2F Meeting

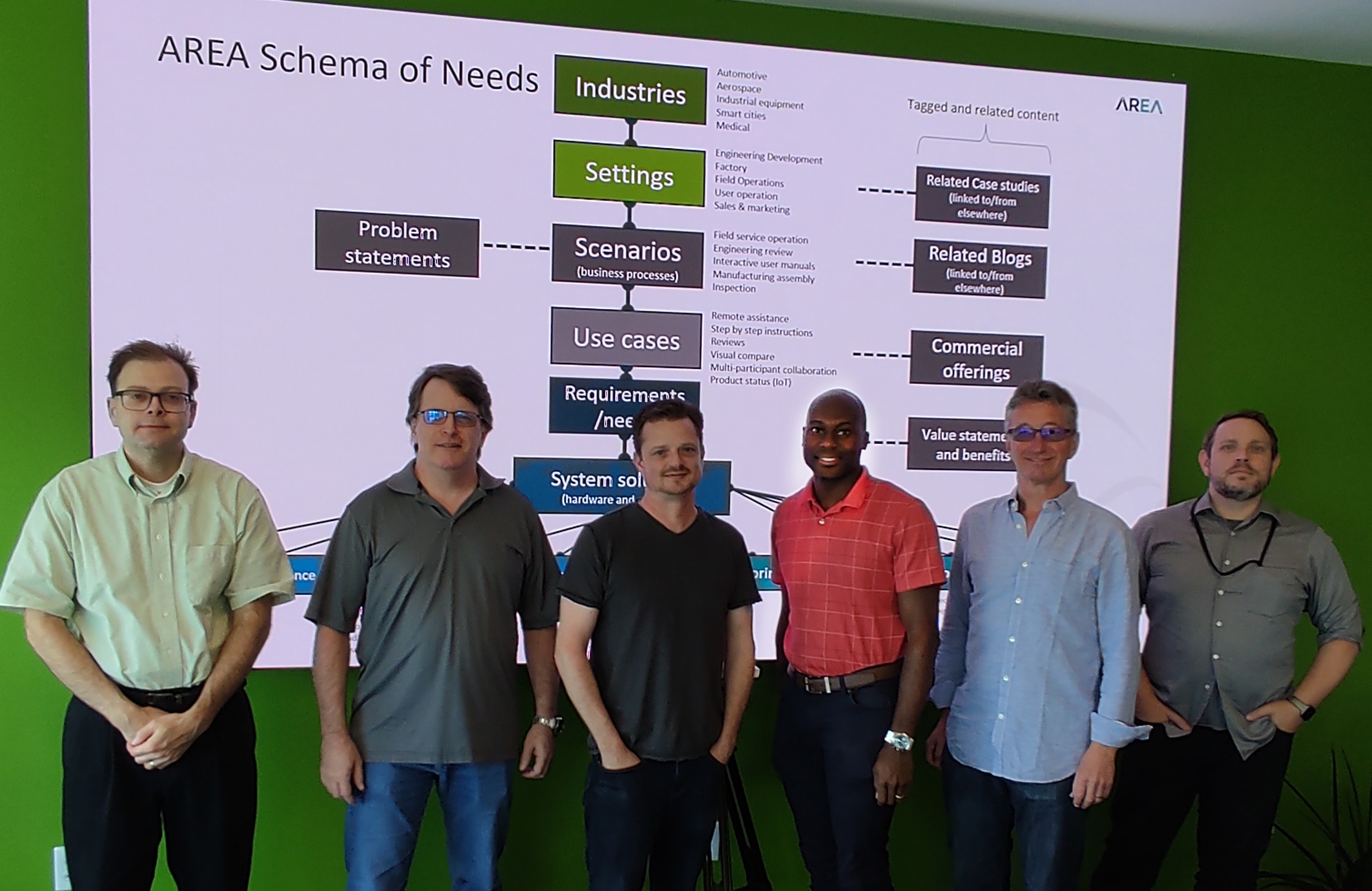

The future success of enterprise AR depends on vendors and enterprises having a shared understanding of the hardware, software, and use case requirements for each type of AR solution. Establishing those requirements is the work of the AREA Requirements Committee – and on August 11th, the group convened in Boston for two days of face-to-face meetings to advance their work.

Requirements are essential because they enable enterprises to evaluate what they need to implement an AR solution. At the same time, requirements provide AR hardware and software developers with the input they need to build products that fulfil enterprise needs.

Over the past three months, the Requirements Working Group has been meeting regularly to develop and agree on a set of Global Enterprise AR Requirements. The goal of the face-to-face meeting in Boston was to finalize the first phase of those requirements.

The Working Group included the following AREA members:

- Brian Kononchik – Boston Engineering

- Jeff Coon – PTC

- Matthew Cooney – PTC

- Dan McPeters – Newport News Shipbuilding

- Malcolm Spencer – Magic Leap

- Jeremy Marvel – NIST

- Barry Cavanaugh – MIT Lincoln Lab

- Doyin Adewodu – Infrasi

- Mark Sage – Executive Director of the AREA

The two-day workshop was a great success, and highly productive! Bringing together AREA members from all parts of the AR ecosystem (end users, hardware providers, software providers, standards organizations and academics) produced a rich, diverse, focused and expert view of the Requirements needed to successfully deploy an enterprise AR solution.

The team focused on three key areas:

- Hardware Requirements

- Generic Software Requirements

- AR Use Case Requirements (based on the defined AREA Use Cases)

The first order of business was to conduct a detailed review and update of the Hardware and Generic Software Requirements that the Working Group had previously drafted. The Working Group then turned to defining the individual Use Case Requirements. Over the two days, the team succeeded in prioritizing the Use Cases and identifying a common set of requirements.

There was also an opportunity to review the updated AREA Statement of Needs (ASoN) tool, a purpose-built online tool for capturing, storing, updating and publishing AR Requirements. The group reviewed the tool's functionality and reporting and captured suggested improvements.

At the end of the event, all the participants agreed that it was a very useful and informative workshop that should be held on a regular basis. My thanks to the attendees and to the amazing team at PTC, who provided the space and excellent facilities for the workshop.

Watch this space for more information about next steps and the upcoming launch of the AREA Global Enterprise AR Requirements.