Leading Water Utility in Wales Turns to AR to Reduce Errors and Improve Service

Dŵr Cymru Welsh Water (DCWW) supplies drinking water and wastewater services to most of Wales and parts of western England. A public utility with 3,000 employees serving 1.4 million homes and businesses, DCWW maintains 26,500 km of water mains and 838 sewage treatment works. DCWW recently launched a pilot project to develop a mobile solution with AR capabilities that replaces thousands of pages of operations and maintenance manuals. The AREA spoke recently with DCWW's Gary Smith and Glen Peek to learn more about the solution, which they call the Interactive Work Operations Manual (IWOM).

AREA: What problem were you trying to solve with this solution?

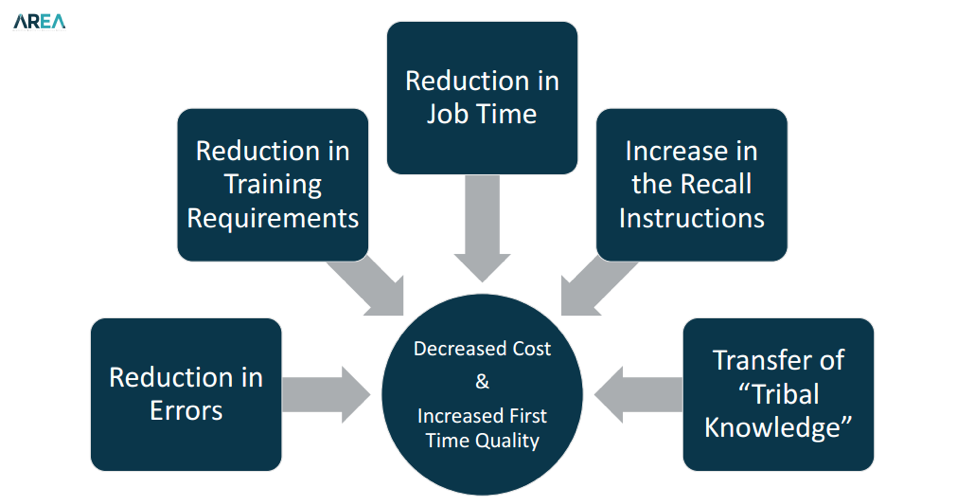

DCWW: We need to give our operational teams comprehensive information on how to operate and maintain our assets. Traditionally this was delivered as an operations and maintenance manual that could run to thousands of printed pages. We wanted a robust device that could deliver that complex information using sensing technology and augmented and virtual reality. We wanted something that could tell users where they are on an asset, let them interrogate items of equipment by scanning a readable code or tag, and provide information including running state and maintenance requirements. We felt a solution like that could help users make better-informed, more accurate decisions, reducing errors and risk and improving customer service.

AREA: What is the IWOM?

DCWW: The Interactive Work Operations Manual is a smart, tablet-based electronic manual that users can wear or hold. It delivers complex technical information, including schematics, process flows, electrical drawings, maintenance records and service requirements, in a simple-to-use, intuitive device. The IWOM uses near field communication (NFC) and QR codes to identify equipment and then presents users with information about it. The IWOM is intrinsically safe, so it can be used in hazardous, outdoor, wet and dusty environments. It uses AR to overlay a layer of process information and instructions on the user's view, such as the direction to turn valves and switches or the ideal operating ranges for gauges and dials. This information hovers in front of users without obstructing their view, which minimises risk while enhancing what they see, helping them make the right decisions and record operating information.

The IWOM delivers this information in an interactive and visually attractive way. Site inductions include videos that highlight high-hazard areas and daily dynamic risks. Users sign on the device, and these records are passed to SAP so that they can be retrieved site by site or per user to help demonstrate compliance.

AREA: How is the IWOM integrated with “lean” operating principles?

DCWW: The device automates the delivery of lean rounds and prompts users to act at the required times. Users carry out their rounds and record their findings, which are automatically synced with the central lean records. This removes the need for paper records and for handling the same data multiple times, which saves time, drives efficiency and reduces the likelihood of operator error in data transfer.

AREA: How were you able to ensure user acceptance?

DCWW: In several ways. First, we engaged with users from the start. Our development team have worked side by side with the operational teams to make sure they developed what users wanted. User feedback on the initial proof of concept has been excellent, and there is now a real hunger to widen the pilot's scope and show what we can achieve with the smart application of the technology, driving efficiencies and increasing safety and reliability.

We also engaged with industry and emerging technology developers. The development team realised they were leading the way in the water and sewage sector in applying this technology. The team have shared their ideas with the industrial and commercial sectors, universities and other groups, including the AREA, the British Computer Society, and the UK Wearable Technology Development Group.

During the later stages of the pilot device development, additional teams were drawn into assessing beta test versions, and the ideas were showcased at the Works Management Board, Directors' Briefings, team meetings and innovation groups to gauge the device's acceptability and usability. Without this involvement, the programme would not have been a success.

Our development team is now building a similar version of the IWOM for the wastewater treatment process, and a second pilot version is under development and will be delivered before the end of March 2018.

AREA: How long did it take to develop the IWOM?

DCWW: The IWOM progressed from an idea generated and showcased in spring 2017 to a live working application, developed over a nine-month period. It is now installed on a ruggedized handheld tablet or wearable device, allowing users to access the content from any location in a safe environment, with the option of either hands-free or handheld use.

AREA: Does the IWOM include a Digital Twin?

DCWW: Yes, the IWOM's digital twin is a replica of our physical assets, processes and systems. In the pilot programme, we digitally mapped the inside and outside of the pilot site with 3D point-cloud laser scanning equipment, and these data points were used to create a digital model. It is possible to virtually walk or fly through this model, allowing users to view any area of the pilot site on the tablet or remotely. One significant advantage is that a user can view a building, a structure or an item of plant from any location and direct an operator on the site without being physically present.

AREA: How does this tie into the Internet of Things?

DCWW: The IWOM is a driving force in Welsh Water’s IoT strategy. We are working to connect all the devices in our environment that are embedded with electronics, sensors, and actuators so that they can exchange data. The IWOM leads the way in how the IoT is being developed in the water and sewage sector.

AREA: What makes the IWOM unique?

DCWW: The development team have been able to take the best of the existing paper documentation and merge it with cutting-edge technology at a very low unit cost, delivering an intuitive product to operators in the field. For example, site inductions and dynamic risk assessments are delivered interactively; these are crucial to reducing risk and making sure employees and visitors return home safely at the end of their working day. The IWOM is also one of very few industrial AR applications that are entirely self-contained in a handheld device.

AREA: What benefits has the IWOM delivered?

DCWW: The IWOM removes the need to reproduce complex operating manuals on paper. Updates to the operational manuals are now delivered by a team of CAD technicians who transcribe the design data into a rendered 3D digital format, rather than stripping out detail to produce a simple 2D printed image. It's much more efficient. Also, by eliminating paper manual updates, we've spared operators the effort and errors associated with manually removing and replacing pages. The IWOM is updated centrally and an electronic copy is pushed to users, so they always have the most up-to-date version of the manual at the point they need it.

AREA: How easy is it to replicate the IWOM?

DCWW: The IWOM has not been resource-intensive. Much of the development has been done in-house by enthusiastic, skilled amateurs, in the team's own time in the evenings and at weekends. Welsh Water purchased a tablet computer to display the output and secured a "two for one" deal on the wearable device, so we have kept total hardware costs down. A development partner was secured by tender to help us design the bespoke software, and they were so committed to the project that they contributed to the development costs. Companywide implementation would require a bespoke package to be developed for each site. We believe we can develop these in-house as our skill set grows, and rather than place the software package on a bespoke device at each site, we believe we can host it on our own servers. That would make the information on any asset with an IWOM available to any user with the right credentials and access rights.

AREA: How can our readers learn more about your work?

DCWW: Welsh Water is presenting at the April 2018 Welsh Innovation Event, the STEM event in February, Smart Water 2018, the Wearable Technology Conference, and at a hosted seminar for the British Computer Society in the autumn of this year.

AREA: What would you like to say to AREA members and the broader AR ecosystem?

DCWW: The IWOM development team would like to share their ideas with others. We want to continue to explore what other industries are doing in this area and share best practices. In particular, we would like to hear from other developers in the AR, IoT and haptic fields of expertise.

Gary Smith is Head of IMS and Asset Information, Glen Peek is WOMS Manager, and Ben Cale is the Data Analyst at Dŵr Cymru Welsh Water.

CES 2018 also revealed some of the gaps that need to be filled for the AR movement to accelerate. First, "the world is seriously devoid of AR talent," as Jim Heppelmann noted. Second, spatially based visuals require complex, high-resolution objects to be delivered to the user. These are generally too large and dynamic to be contained within static apps on a local client, so they need to be streamed live over the web. The developer community needs to establish protocols for real-time AR asset streams, as it has done for web VR in the past.